
Nvidia CEO Jensen Huang boasted of many world-changing technologies in his keynote at Tuesday’s GPU Technology Conference (GTC), but he chose to close on a promise to help save the world.

“We will build a digital twin to simulate and predict climate change,” he said, framing it as a tool for understanding how to mitigate climate change’s effects. “This new supercomputer will be E2, Earth 2, the digital twin of Earth, running Modulus-created AI physics at a million times speeds in the Omniverse. All the technologies we’ve invented up to this moment are needed to make E2 possible. I can’t imagine greater and more important news.”

Utilizing digital twins to model improvements for the real world

Consider this Nvidia’s goal for 2021: a stretch challenge that ultimately feeds not just scientific computing but also Nvidia’s ambition to transform into a full-stack computing company. Although he spent a lot of time talking up the Omniverse, Nvidia’s concept for connected 3D worlds, Huang wanted to make clear that it is intended not merely as a digital playground but also as a place to model improvements in the real world. “Omniverse is different from a gaming engine. Omniverse is built to be data center scale and hopefully, eventually, planetary scale,” he said.

Earth 2 is meant to be the next step beyond Cambridge-1, the $100 million supercomputer Nvidia launched in June and made available to health care researchers in the U.K. Nvidia pursued that effort in partnership with drug development and academic researchers, with participation from GSK and AstraZeneca. Nvidia press contacts declined to provide more details about who else might be involved in Earth 2, although Huang may say more in a press conference scheduled for Wednesday.

The lack of detail left some wondering if E2 was for real. Tech analyst Addison Snell tweeted, “I believe the statement was meant to be visionary. If it’s a real initiative, I have questions, which I will ask after a good night’s sleep.”

By definition, a supercomputer is many times more powerful than the general-purpose computers used for ordinary business applications. That means the definition of what constitutes a supercomputer keeps changing as performance trickles down into general-purpose computing, to the point where an iPhone of today is said to be more powerful than the IBM supercomputer that beat chess champion Garry Kasparov in 1997 and far more powerful than the computers used to guide the Apollo missions in the 1960s and 1970s.

Battling climate change with Earth’s digital twin

Many of the advances Nvidia announced are aimed at making very high-performance computing more broadly available, for example by allowing businesses to tap into it as a cloud service and apply it to purposes such as zero-trust computing.

Today’s supercomputers are typically built out of large arrays of servers running Linux, wired together with very fast interconnects. As supercomputing centers begin opening access to more researchers — and cloud computing providers begin offering supercomputing services — Nvidia’s Quantum-2 platform, available now, offers an important change in supercomputer architecture, Huang said.

“Quantum-2 is the first networking platform to offer the performance of a supercomputer and the shareability of cloud computing,” Huang said. “This has never been possible before. Until Quantum-2, you get either bare metal high performance or secure multi-tenancy, never both. With Quantum-2, your valuable supercomputer will be cloud-native and far better utilized.”

Quantum-2 is a 400Gbps InfiniBand networking platform that consists of the Nvidia Quantum-2 switch, the ConnectX-7 network adapter, the BlueField-3 data processing unit (DPU), and supporting software.

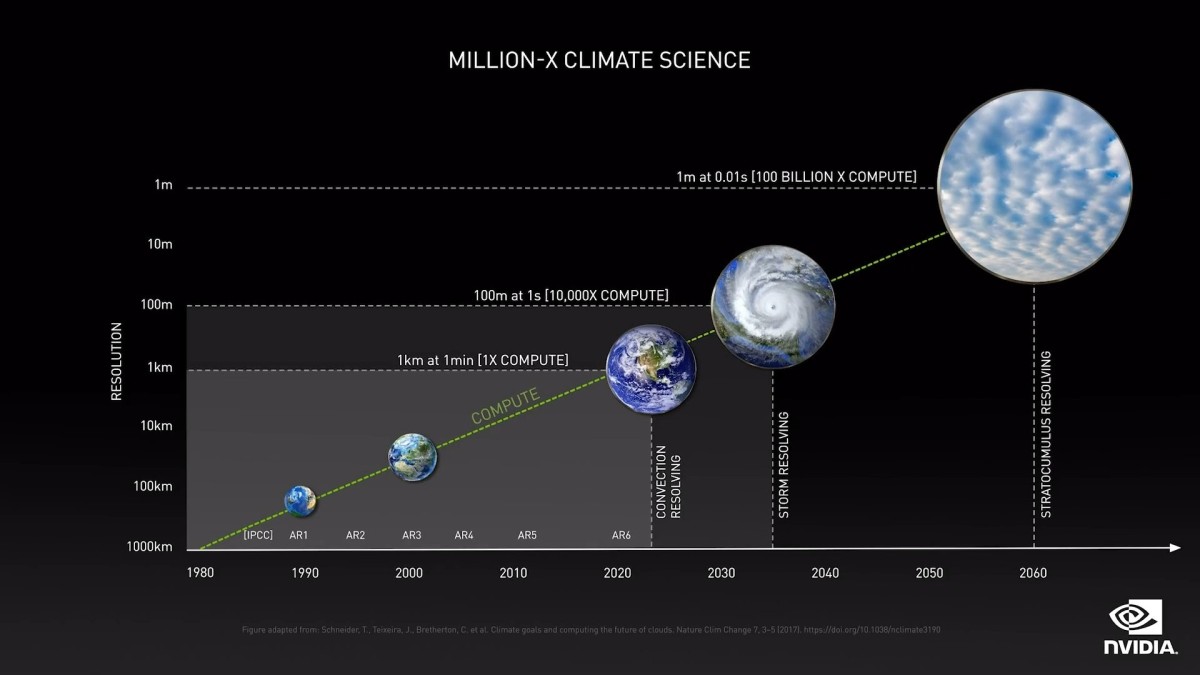

Nvidia did not detail the architecture of E2, but Huang said modeling Earth’s climate in enough detail to make accurate predictions 10, 20, or 30 years into the future is a devilishly hard problem.

“Climate simulation is much harder than weather simulation, which largely models atmospheric physics — and the accuracy of the model can be validated every few days. Long-term climate prediction must model the physics of Earth’s atmosphere, oceans and waters, ice, the land, and human activities and all of their interplay. Further, simulation resolutions of one to ten meters are needed to incorporate effects like low atmospheric clouds that reflect the sun’s radiation back to space.”
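To get a feel for why the one-to-ten-meter resolutions Huang mentions are so demanding, the back-of-the-envelope sketch below counts how many square cells it would take to tile Earth's surface at a given grid spacing. It is only an illustration, not Nvidia's method: the spherical-Earth approximation, the `surface_cells` helper, and the 10 km comparison grid are assumptions introduced here, and a real climate model would also need many vertical layers and time steps.

```python
import math

# Mean Earth radius in meters (standard approximate value; Earth is treated as a sphere).
EARTH_RADIUS_M = 6.371e6


def surface_cells(resolution_m: float) -> float:
    """Approximate count of square cells with the given edge length covering Earth's surface."""
    surface_area_m2 = 4.0 * math.pi * EARTH_RADIUS_M ** 2
    return surface_area_m2 / resolution_m ** 2


if __name__ == "__main__":
    # 10 km is an assumed coarse grid for comparison; 10 m and 1 m are the
    # resolutions cited in the keynote.
    for resolution_m in (10_000.0, 10.0, 1.0):
        cells = surface_cells(resolution_m)
        print(f"{resolution_m:>8.0f} m grid: ~{cells:.1e} surface cells")
```

Because the cell count scales with the inverse square of the grid spacing, moving from a 10 km grid to a 1 m grid multiplies the number of surface cells by a factor of 100 million, before any vertical layers are counted.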

Nvidia is tackling this issue using its new Modulus framework for developing physics machine learning models. Progress is sorely needed, given how fast the Earth’s climate is changing, for example with evaporation-induced droughts and drinking water reservoirs that have dropped by as much as 150 feet.

“To develop strategies to mitigate and adapt is arguably one of the greatest challenges facing society today,” Huang said. “The combination of accelerated computing, physics ML, and giant computer systems can give us a million times leap — and give us a shot.”

VentureBeat
