Machine learning models are as good as the data they’re trained on.

Without high-quality training data, even the most efficient machine learning algorithms will fail to perform.

The need for quality, accurate, complete, and relevant data starts early on in the training process. Only if the algorithm is fed with good training data can it easily pick up the features and find relationships that it needs to predict down the line.

More precisely, quality training data is the most significant aspect of machine learning (and artificial intelligence) than any other. If you introduce the machine learning (ML) algorithms to the right data, you’re setting them up for accuracy and success.

What is training data?

Training data is the initial dataset used to train machine learning algorithms. Models create and refine their rules using this data. It’s a set of data samples used to fit the parameters of a machine learning model to training it by example.

Training data is also known as training dataset, learning set, and training set. It’s an essential component of every machine learning model and helps them make accurate predictions or perform a desired task.

Simply put, training data builds the machine learning model. It teaches what the expected output looks like. The model analyzes the dataset repeatedly to deeply understand its characteristics and adjust itself for better performance.

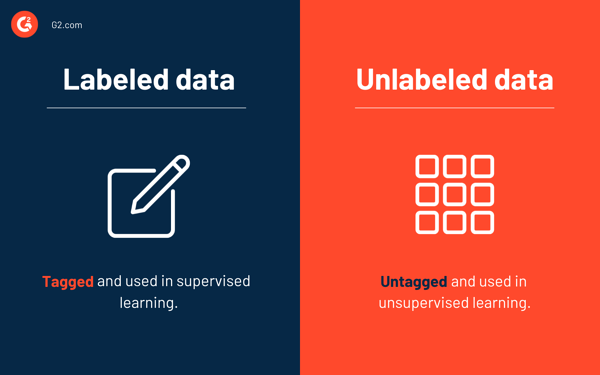

In a broader sense, training data can be classified into two categories: labeled data and unlabeled data.

What is labeled data?

Labeled data is a group of data samples tagged with one or more meaningful labels. It’s also called annotated data, and its labels identify specific characteristics, properties, classifications, or contained objects.

For example, the images of fruits can be tagged as apples, bananas, or grapes.

Labeled training data is used in supervised learning. It enables ML models to learn the characteristics associated with specific labels, which can be used to classify newer data points. In the example above, this means that a model can use labeled image data to understand the features of specific fruits and use this information to group new images.

Data labeling or annotation is a time-consuming process as humans need to tag or label the data points. Labeled data collection is challenging and expensive. It isn’t easy to store labeled data when compared to unlabeled data.

What is unlabeled data?

As expected, unlabeled data is the opposite of labeled data. It’s raw data or data that’s not tagged with any labels for identifying classifications, characteristics, or properties. It’s used in unsupervised machine learning, and the ML models have to find patterns or similarities in the data to reach conclusions.

Going back to the previous example of apples, bananas, and grapes, in unlabeled training data, the images of those fruits won’t be labeled. The model will have to evaluate each image by looking at its characteristics, such as color and shape.

After analyzing a considerable number of images, the model will be able to differentiate new images (new data) into the fruit types of apples, bananas, or grapes. Of course, the model wouldn’t know that the particular fruit is called an apple. Instead, it knows the characteristics needed to identify it.

There are hybrid models that use a combination of supervised and unsupervised machine learning.

How training data is used in machine learning

Unlike machine learning algorithms, traditional programming algorithms follow a set of instructions to accept input data and provide output. They don’t rely on historical data, and every action they make is rule-based. This also means that they don’t improve over time, which isn’t the case with machine learning.

For machine learning models, historical data is fodder. Just as humans rely on past experiences to make better decisions, ML models look at their training dataset with past observations to make predictions.

Predictions could include classifying images as in the case of image recognition, or understanding the context of a sentence as in natural language processing (NLP).

Think of a data scientist as a teacher, the machine learning algorithm as the student, and the training dataset as the collection of all textbooks.

The teacher’s aspiration is that the student must perform well in exams and also in the real world. In the case of ML algorithms, testing is like exams. The textbooks (training dataset) contain several examples of the type of questions that’ll be asked in the exam.

Tip: Check out big data analytics to know how big data is collected, structured, cleaned, and analyzed.

Of course, it won’t contain all the examples of questions that’ll be asked in the exam, nor will all the examples included in the textbook will be asked in the exam. The textbooks can help prepare the student by teaching them what to expect and how to respond.

No textbook can ever be fully complete. As time passes, the kind of questions asked will change, and so, the information included in the textbooks needs to be changed. In the case of ML algorithms, the training set should be periodically updated to include new information.

In short, training data is a textbook that helps data scientists give ML algorithms an idea of what to expect. Although the training dataset doesn’t contain all possible examples, it’ll make algorithms capable of making predictions.

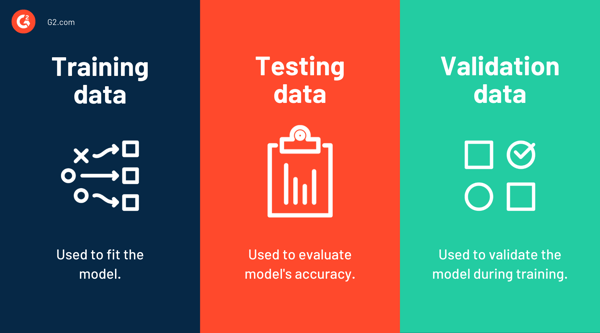

Training data vs. test data vs. validation data

Training data is used in model training, or in other words, it’s the data used to fit the model. On the contrary, test data is used to evaluate the performance or accuracy of the model. It’s a sample of data used to make an unbiased evaluation of the final model fit on the training data.

A training dataset is an initial dataset that teaches the ML models to identify desired patterns or perform a particular task. A testing dataset is used to evaluate how effective the training was or how accurate the model is.

Once an ML algorithm is trained on a particular dataset and if you test it on the same dataset, it’s more likely to have high accuracy because the model knows what to expect. If the training dataset contains all possible values the model might encounter in the future, all well and good.

But that’s never the case. A training dataset can never be comprehensive and can’t teach everything that a model might encounter in the real world. Therefore a test dataset, containing unseen data points, is used to evaluate the model’s accuracy.

Then there’s validation data. This is a dataset used for frequent evaluation during the training phase. Although the model sees this dataset occasionally, it doesn’t learn from it. The validation set is also referred to as the development set or dev set. It helps protect models from overfitting and underfitting.

Although validation data is separate from training data, data scientists might reserve a part of the training data for validation. But of course, this automatically means that the validation data was kept away during the training.

Tip: If you’ve got a limited amount of data, a technique called cross-validation can be used to estimate the model’s performance. This method involves randomly partitioning the training data into multiple subsets and reserving one for evaluation.

Many use the terms “test data” and “validation data” interchangeably. The main difference between the two is that validation data is used to validate the model during the training, while the testing set is used to test the model after the training is completed.

The validation dataset gives the model the first taste of unseen data. However, not all data scientists perform an initial check using validation data. They might skip this part and go directly to testing data.

What is human in the loop?

Human in the loop refers to the people involved in the gathering and preparation of training data.

Raw data is gathered from multiple sources, including IoT devices, social media platforms, websites, and customer feedback. Once collected, individuals involved in the process would determine the crucial attributes of the data that are good indicators of the outcome you want the model to predict.

The data is prepared by cleaning it, accounting for missing values, removing outliers, tagging data points, and loading it into suitable places for training ML algorithms. There will also be several rounds of quality checks; as you know, incorrect labels can significantly affect the model’s accuracy.

What makes training data good?

High-quality data translates to accurate machine learning models.

Low-quality data can significantly affect the accuracy of models, which can lead to severe financial losses. It’s almost like giving a student a textbook containing wrong information and expecting them to excel in the examination.

The following are the four primary traits of quality training data.

Relevant

The data needs to be relevant to the task at hand. For example, if you want to train a computer vision algorithm for autonomous vehicles, you probably won’t require images of fruits and vegetables. Instead, you would need a training dataset containing photos of roads, sidewalks, pedestrians, and vehicles.

Representative

The AI training data must have the data points or features that the application is made to predict or classify. Of course, the dataset can never be absolute, but it must have at least the attributes the AI application is meant to recognize.

For example, if the model is meant to recognize faces within images, it must be fed with diverse data containing people’s faces from various ethnicities. This will reduce the problem of AI bias, and the model won’t be prejudiced against a particular race, gender, or age group.

Uniform

All data should have the same attribute and must come from the same source.

Suppose your machine learning project aims to predict churn rate by looking at customer information. For that, you’ll have a customer information database that includes customer name, address, number of orders, order frequency, and other relevant information. This is historical data and can be used as training data.

One part of the data can’t have additional information, such as age or gender. This will make training data incomplete and the model inaccurate. In short, uniformity is a critical aspect of quality training data.

Comprehensive

Again, the training data can never be absolute. But it should be a large dataset that represents the majority of the model’s use cases. The training data must have enough examples that’ll allow the model to learn appropriately. It must contain real-world data samples as it will help train the model to understand what to expect.

If you’re thinking of training data as values placed in large numbers of rows and columns, sorry, you’re wrong. It could be any data type like text, images, audio, or videos.

What affects training data quality?

Humans are highly social creatures, but there are some prejudices that we might have picked as children and require constant conscious effort to get rid of. Although unfavorable, such biases may affect our creations, and machine learning applications are no different.

For ML models, training data is the only book they read. Their performance or accuracy will depend on how comprehensive, relevant, and representative the very book is.

That being said, three factors affect the quality of training data:

-

People: The people who train the model have a significant impact on its accuracy or performance. If they’re biased, it’ll naturally affect how they tag data and, ultimately, how the ML model functions.

-

Processes: The data labeling process must have tight quality control checks in place. This will significantly increase the quality of training data.

-

Tools: Incompatible or outdated tools can make data quality suffer. Using robust data labeling software can reduce the cost and time associated with the process.

Where to get training data

There are several ways to get training data. Your choice of sources can vary depending on the scale of your machine learning project, the budget, and the time available. The following are the three primary sources for collecting data.

Open-source training data

Most amateur ML developers and small businesses that can’t afford data collection or labeling rely on open-source training data. It’s an easy choice as it’s already collected and free. However, you’ll most probably have to tweak or re-annotate such datasets to fit your training needs. ImageNet, Kaggle, and Google Dataset Search are some examples of open-source datasets.

Internet and IoT

Most mid-sized companies collect data using the internet and IoT devices. Cameras, sensors, and other intelligent devices help collect raw data, which will be cleaned and annotated later. This data collection method will be specifically tailored to your machine learning project’s requirements, unlike open-source datasets. However, cleaning, standardizing, and labeling the data is a time-consuming and resource-intensive process.

Artificial training data

As the name suggests, artificial training data is artificially created data using machine learning models. It’s also called synthetic data, and it’s an excellent choice if you require good quality training data with specific features for training an algorithm. Of course, this method will require large amounts of computational resources and ample time.

How much training data is enough?

There isn’t a specific answer to how much training data is enough training data. It depends on the algorithm you’re training – its expected outcome, application, complexity, and many other factors.

Suppose you want to train a text classifier that categorizes sentences based on the occurrence of the terms “cat” and “dog” and their synonyms such as “kitty,” “kitten,” “pussycat,” “puppy,” or “doggy”. This might not require a large dataset as there are only a few terms to match and sort.

But, if this was an image classifier that categorized images as “cats” and “dogs,” the number of data points needed in the training dataset would shoot up significantly. In short, many factors come into play to decide what training data is enough training data.

The amount of data required will change depending on the algorithm used.

For context, deep learning, a subset of machine learning, requires millions of data points to train the artificial neural networks (ANNs). In contrast, machine learning algorithms require only thousands of data points. But of course, this is a far-fetched generalization as the amount of data needed varies depending on the application.

The more you train the model, the more accurate it becomes. So it’s always better to have a large amount of data as training data.

Garbage in, garbage out

The phrase “garbage in, garbage out” is one of the oldest and most used phrases in data science. Even with the rate of data generation growing exponentially, it still holds true.

The key is to feed high-quality, representative data to machine learning algorithms. Doing so can significantly enhance the accuracy of models. Good quality training data is also crucial for creating unbiased machine learning applications.

Ever wondered what computers with human-like intelligence would be capable of? The computer equivalent of human intelligence is known as artificial general intelligence, and we’re yet to conclude whether it will be the greatest or the most dangerous invention ever.